Whenever Google comes out with a new webmaster guideline, I wonder what they are up to. Every action they take has the potential to aid or disrupt the normal flow of the internet that we all rely on now. Take, for example, the announcement of their mobile-friendliness ranking factor and the potential for disruption that #mobilegeddon might cause three weeks from now...

Is #mobilegeddon really for the benefit of users, or does Google have a larger plan? I have a feeling it relates back to lowering the carbon footprint of your website as well.

Simple Ranking Factors

Unlike #mobilegeddon, most ranking factors have a small effect on a website. Rich Snippets and Semantic SEO are ranking factors that Google recommends, but few people implement them because of their complexity. It's even more difficult to measure the short-term effectiveness of those Rich Snippets.

Rebuilding a Website with Rich Snippets

During the summer of 2014, I had the opportunity to rebuild a website that had a lot of blog posts. The rebuilding process included an update to the HTML code and a meticulous incorporation of several semantic SEO methods explained on Schema.org. It took more than 40 hours of programming and testing to get the schema microdata to work and be recognized by Google.
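To give a sense of what that microdata looks like, here is a minimal sketch in Python. The HTML snippet is a made-up example of a blog post marked up with the Schema.org BlogPosting type, not the actual markup from the rebuilt site, and the `MicrodataScanner` class and `scan_microdata` function are hypothetical helper names. The scanner only lists the `itemtype` and `itemprop` values it finds, which is a quick sanity check before sending a page to Google's own testing tools.

```python
from html.parser import HTMLParser

# Hypothetical blog post markup using Schema.org BlogPosting microdata.
# The title, date, and author are placeholders, not data from the real site.
SAMPLE_HTML = """
<article itemscope itemtype="https://schema.org/BlogPosting">
  <h1 itemprop="headline">Example Post Title</h1>
  <time itemprop="datePublished" datetime="2014-09-01">September 1, 2014</time>
  <span itemprop="author" itemscope itemtype="https://schema.org/Person">
    <span itemprop="name">Example Author</span>
  </span>
  <div itemprop="articleBody">Body text goes here.</div>
</article>
"""


class MicrodataScanner(HTMLParser):
    """Collects the itemtype URLs and itemprop names found in the markup."""

    def __init__(self):
        super().__init__()
        self.item_types = []
        self.item_props = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; valueless attributes
        # such as itemscope arrive with a value of None.
        attr_map = dict(attrs)
        if "itemscope" in attr_map and "itemtype" in attr_map:
            self.item_types.append(attr_map["itemtype"])
        if "itemprop" in attr_map:
            self.item_props.append(attr_map["itemprop"])


def scan_microdata(html):
    """Return (item_types, item_props) in document order."""
    scanner = MicrodataScanner()
    scanner.feed(html)
    return scanner.item_types, scanner.item_props


types, props = scan_microdata(SAMPLE_HTML)
print(types)
print(props)
```

A scan like this only confirms that the attributes are present in the HTML. Whether Google recognizes them is a separate question, and that is where most of the testing time went.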

Relaunch and Loss

The rebuilt site was launched in early September 2014 with high expectations. With all that extra programming time and cost, I had hoped to measure the search engine traffic improvements right away, but Google doesn't work like that.

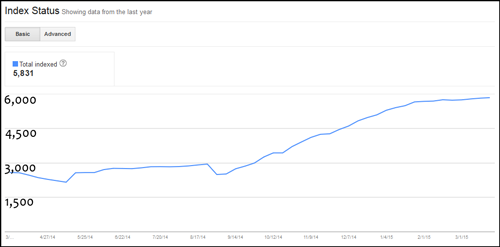

This chart shows what happened to the website within 45 days of the relaunch:

There was a large drop in the number of indexed pages as Google went through the new design rediscovery process. From a website owner's point of view that initial massive drop is disastrous, and it has been the reason many agencies have been hastily fired.

Back to Square One

But you can see that the website had regained its indexed page count by September 28, 2014. Then in mid-October there's a short plateau on the chart that corresponds to the time when Google rolled out their Penguin 3.0 update. Considering the number of blogs on this website, it's quite possible that it was affected by the Penguin update in some way.

The Google Penguin filter evaluates how pages link to each other and whether they use spammy practices for that linking. While the individual blog site I'm presenting here wasn't itself penalized by Penguin, other blogs and forums that linked to it probably were.

Is Microdata Worth the Effort?

Using microdata on your website is supposed to make your website more understandable to search engines. Websites that are easier to understand should require less processing time to figure out, which in turn lowers the amount of energy Google uses to index your site. This ultimately leads to Google indexing more pages on your site.

Keep in mind that indexing your website is not the same as ranking. Indexing is the process of digesting the information on your website and storing it in the search engine database. How high or low your web pages appear in the search results relates to how well your content matches a search query.

However, having more pages indexed also means you have a greater chance of matching a wider variety of search queries. Implementing microdata is a means to an end, but like I said, it's a very difficult process to get through.

Ultimate Results

Now that 7 months have passed, it's easier to see how the redesign and microdata helped improve the number of pages indexed on this site. Here's the full chart showing the last 12 months:

As you can see, the number of indexed pages was hovering just below 3,000 for a long time. Different Google updates had even knocked it down a few times. This chart was pulled from Google Webmaster Tools.

I'd like to point out that the redesign of the site was only a change of the HTML code. The content and the URLs all remained the same.

It seems like Google has finally completed the full indexing of all the new microdata, and we've been able to find the individual blogs appearing more frequently in search for targeted search queries.

The site doesn't actually have 5,831 pages as shown in the report. That number also includes the URLs Googlebot generates by using the website's built-in search feature to dig for different combinations of results.
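If you have a list of indexed or crawled URLs, you can roughly separate real content pages from internal search results. The sketch below is only an illustration: the `SEARCH_PARAMS` names, the `/search` path, and the example URLs are assumptions, so adjust them to however your own site's search feature builds its URLs.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical query parameter names used by a site's built-in search.
SEARCH_PARAMS = {"s", "q", "search"}


def is_internal_search(url):
    """Return True if the URL looks like an internal search results page."""
    parsed = urlparse(url)
    query = parse_qs(parsed.query, keep_blank_values=True)
    has_search_param = not SEARCH_PARAMS.isdisjoint(query)
    has_search_path = parsed.path.rstrip("/").endswith("/search")
    return has_search_param or has_search_path


def count_content_pages(urls):
    """Count the URLs that are not internal search results."""
    return sum(1 for url in urls if not is_internal_search(url))


# Made-up URLs for illustration only.
example_urls = [
    "https://example.com/blog/post-one",
    "https://example.com/blog/post-two",
    "https://example.com/?s=rich+snippets",
    "https://example.com/search/?q=microdata",
    "https://example.com/blog/?s=penguin&page=2",
]

print(count_content_pages(example_urls))
```

The gap between the total and the filtered count gives a rough idea of how much of the reported index is search-result pages rather than actual posts.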

TL;DR

Rich Snippets and Semantic SEO using Schema.org microdata formats will help your website establish a larger footprint inside Google's index, but they don't specifically improve where your pages rank in search results. Positive results from microdata take a really long time to show up in any report, and investing in them requires a leap of faith from a website owner.