According to Wikipedia, uptime "is a measure of the time a machine, typically a computer, has been working and available."

Interestingly, the Wikipedia page explains Uptime only in relation to the physical computer servers that now run just about everything in the world, not in relation to websites.

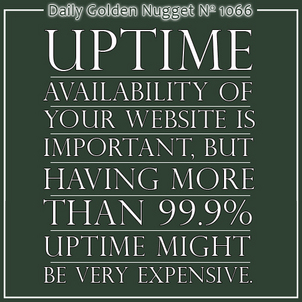

Last week I mentioned uptime in my Nugget about organic traffic loss, but then I realized I needed to expand on that topic, so here we go...

Home users rarely hear about Uptime from their telephone, cable, or internet company. They usually pay a low price for service and have to take what they are given, which sometimes means their service goes out when it rains.

Business users are usually more demanding because any loss in telephone, cable, or internet service means a loss in business. Essentially, the communications company has real control over a business's ability to make money, and the low-grade service provided to home users is simply not adequate for business purposes.

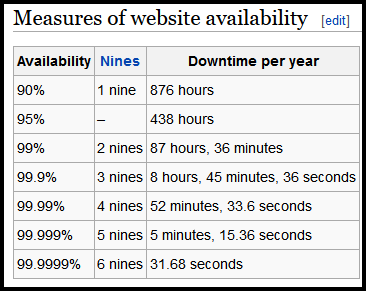

This is where Uptime becomes important, along with something called an Uptime Guarantee. An Uptime Guarantee is usually expressed as "availability," measured as the percentage of time the service is available. Typically those percentages have lots of "9's" in them, as in "two 9's of uptime," "four 9's of uptime," or the coveted "six 9's of uptime."

Here's the "Measure of website availability" from Wikipedia:


As you can see, 99.9% (that's three 9's) availability allows a total outage of 8 hours, 45 minutes per year. That is the combined downtime for the whole year, not the length of a single outage. This is probably the level of service you would pay for in your office. At this level, your service will probably go out when the power goes out, but then you wouldn't be able to work anyway.

The 99.99% (that's four 9's) availability allows a total outage of 52 minutes, 33 seconds per year. This will be a more expensive service because it needs protection against power failures. In some cases that means battery backups, and sometimes that means backup generators. With four 9's, the only outages should be related to administrative work or physical equipment upgrades. Companies that offer 99.99% uptime need 24/7 monitoring of their service and rapid response plans.

As you might guess, 99.999% uptime (five 9's), which allows only about 5 minutes of downtime per year, is very difficult to achieve. It requires a service with generator and battery backup, redundant equipment that is active all the time, and multiple connections to the internet through different internet providers. This is the type of service that banks and governments rely on.
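The downtime figures above come from simple arithmetic: allowed downtime equals the length of a year multiplied by the fraction of time the service may be unavailable (100% minus the availability). Here is a minimal Python sketch of that calculation, assuming a 365-day year (Wikipedia's table uses 365.25 days, so its numbers differ slightly). The function names are just for illustration.

```python
# Allowed downtime per year for "N nines" of availability.
# Assumption: a 365-day year (Wikipedia's table uses 365.25 days).
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def allowed_downtime_seconds(nines: int) -> float:
    """Yearly downtime budget for availability with `nines` nines (3 -> 99.9%)."""
    # Unavailability is 10**-nines: 99.9% up means 0.1% (10**-3) down.
    return SECONDS_PER_YEAR / 10 ** nines


def format_duration(seconds: float) -> str:
    """Render seconds as days/hours/minutes/seconds, dropping fractions."""
    total = int(seconds)
    days, rem = divmod(total, 86_400)
    hours, rem = divmod(rem, 3_600)
    minutes, secs = divmod(rem, 60)
    parts = []
    if days:
        parts.append(f"{days}d")
    if hours:
        parts.append(f"{hours}h")
    if minutes:
        parts.append(f"{minutes}m")
    if secs or not parts:
        parts.append(f"{secs}s")
    return " ".join(parts)


for n in range(2, 7):
    # Format the percentage with the right number of decimals (99, 99.9, ...).
    label = f"{100 - 10 ** (2 - n):.{n - 2}f}%"
    print(f"{label} -> {format_duration(allowed_downtime_seconds(n))} per year")
```

For three 9's this gives 8h 45m 36s, for four 9's 52m 33s, and for five 9's 5m 15s, which matches the figures above. Each extra 9 divides the downtime budget by ten, which is why every level costs so much more to achieve.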

Snapping back to reality, let me explain how Uptime and availability play into your website.

Google uses a software program to read hundreds of millions of web pages every day. This program is often called a "spider" because it "crawls" through your "web" pages. Officially, it is called "Googlebot."

Every search company has its own "bot" program; in fact, there's a Bingbot, a Yahoobot, an Alexabot, and each social network has its own bot as well. Although it's interesting to know that these bots exist, only Googlebot is ubiquitous enough to notice your website outages.

Odds are that Googlebot will visit a business website at least once per day. During the visit the bot will look for your robots.txt file and test a few pages of your site.

If pages are missing, Googlebot will take action against your SERP ranking, as I explained the other day, and it records those missing pages in your Webmaster Tools account.

Server Uptime issues are recorded as "Server connectivity" problems in Webmaster Tools where it reports different types of connection failures.

The page errors and server errors are shown on the Crawl Errors page of your Webmaster Tools account. Although you might not check this page very often, Google considers these errors important enough that it also shows error messages on your main Webmaster Tools Home screen.

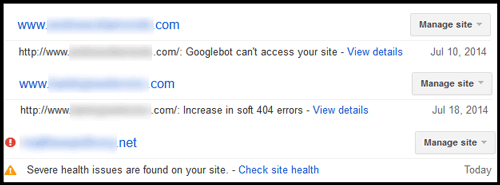

Here's a snapshot of a few recent messages appearing on my own Home screen:

There are 3 types of errors shown above:

1. Googlebot can't access your site

2. Increase in soft 404 errors

3. Server health issues are found on your site.

Let me break these down...

1. Googlebot can't access your site

Googlebot might not be able to reach your website because of:

* server issues, like hard drive failures

* internet outages

* database errors on your website

* DNS misconfigurations

* expired domain names

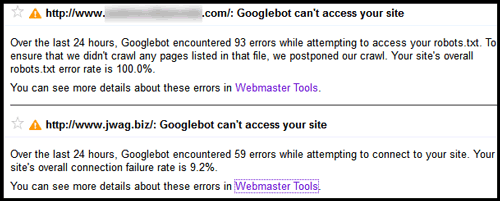

Here are the types of messages you'll see when you click the "View details" link on your Home screen:

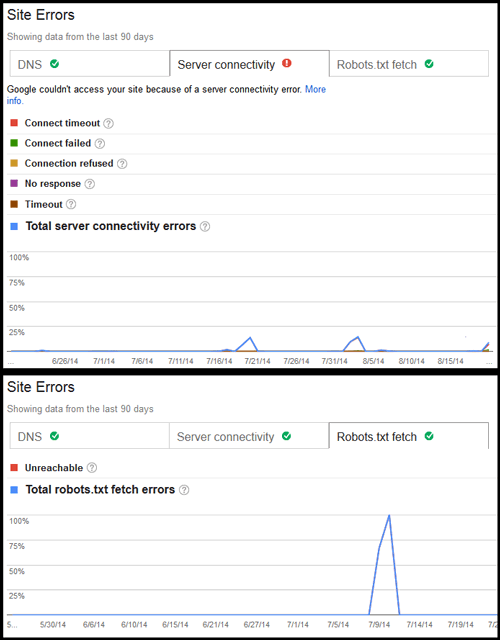

Those error messages don't have a lot of detail, but clicking the "Webmaster Tools" link will bring you to these graphs showing when the errors occurred:

The top chart in the above image shows Server connectivity errors. Those server connectivity errors usually occur only once every other year or so, and usually only when your web server is turned off. Ironically, a 45-minute power failure in my own server room took the jWAG website offline long enough for Googlebot to notice and generate that report. Great timing for me to document it here.

The Server connectivity report shows connect timeouts, connect failures, refused connections, no response, and normal timeouts. The report doesn't show why these errors occurred, but I know it was due to a power failure. You would have to call your web host (not your web programmer) to find out what happened. You can't use Webmaster Tools as a real-time monitoring service because this report doesn't appear until 24 hours after the fact.
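Because Webmaster Tools reports these problems a day late, a simple check you run yourself can fill the gap. The Python sketch below tries one DNS lookup and one TCP connection and returns a rough label for what went wrong. The function name `probe` and its labels are hypothetical and only loosely mirror the categories in the Server connectivity report; they are not how Googlebot classifies errors, and the check stops at the connection stage (it does not request a page).

```python
import socket


def probe(host: str, port: int = 80, timeout: float = 5.0) -> str:
    """Try one TCP connection and return a rough, hypothetical failure label.

    The labels only loosely mirror the Server connectivity report; they
    are not Google's definitions.
    """
    try:
        # Step 1: DNS lookup. A DNS misconfiguration or expired domain fails here.
        socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "dns failure"
    try:
        # Step 2: open a TCP connection, giving up after `timeout` seconds.
        with socket.create_connection((host, port), timeout=timeout):
            return "ok"
    except ConnectionRefusedError:
        return "connection refused"  # host answered, but nothing listens on the port
    except socket.timeout:
        return "connect timeout"  # no answer before the deadline
    except OSError:
        return "connect failure"  # unreachable network, reset, and similar errors
```

If you run a check like this on a schedule, run it from a machine outside your own network: a monitor sitting in the same server room would go offline in the same power failure.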

On the lower part of the above image you'll see the Robots.txt fetch errors. Over the years, I've noticed Googlebot reporting robots.txt fetch errors even though the server host didn't have any outages recorded in its logs. I usually attribute these problems to internet bottlenecks and slow server response times. Googlebot needs to crawl hundreds of millions of other pages every day, and it can't afford to wait long for your website to catch up.

I know it's time to move to a new web server when I see the number of robots.txt fetch errors on the rise.

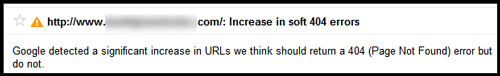

2. Increase in soft 404 errors

The increase in soft 404 errors is an interesting one and I'm glad I can detail it for you. Here's what the full error message says when you open it:

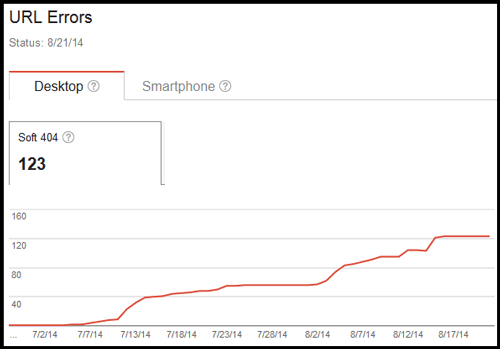

That message doesn't really explain what's happening either, so you need to navigate to Crawl > Crawl Errors > URL Errors in Webmaster Tools. That screen shows a chart and the pages producing the soft 404 error.

Here's what the chart looks like:

A "soft 404" is what Google calls a web page that no longer shows the information it once did and displays an error message instead, while the server still reports the page as successfully delivered (an HTTP 200 status) rather than returning a proper 404 "Not Found" status. This actually happens a lot with online product catalogs; it's the way most catalogs function.

When you remove a product from your website, the online catalog software should generate some type of "product not available any more" message for people who stumble across the page. And people will stumble across that missing product, especially if it was shared on Facebook or Pinterest.

Googlebot reports those "product not available any more" messages as soft 404s, and you'll see these a lot if you update your online catalog often.

Is there a way to prevent the soft 404s? Sure, but be prepared for your website programmer to smack you with a big programming bill to do it.
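The principle behind the fix is simple, even if applying it to real catalog software is not: keep showing the friendly "not available" message to people, but send it with a 404 (Not Found) or 410 (Gone) status code instead of 200 (OK). The sketch below shows the idea with Python's standard-library `http.server`; the product paths and the `CatalogHandler` name are hypothetical, and a real catalog would do this inside its own platform or framework.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical catalog data for illustration only.
PRODUCTS = {"/products/blue-widget": "Blue Widget, $19.99"}
DISCONTINUED = {"/products/red-widget"}


class CatalogHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path in PRODUCTS:
            status, body = 200, PRODUCTS[self.path]
        elif self.path in DISCONTINUED:
            # Same friendly message for people, but a 410 Gone status tells
            # crawlers the page is gone instead of producing a soft 404.
            status, body = 410, "This product is not available any more."
        else:
            status, body = 404, "Page not found."
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)
```

To try it locally, start it with `HTTPServer(("127.0.0.1", 8000), CatalogHandler).serve_forever()` (importing `HTTPServer` from `http.server`) and request the discontinued product path: the browser still shows the message, but the response status is 410.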

3. Server health issues are found on your site.

The last error I can explain today concerns server health. Personally, I find this label a little confusing: to me, server health refers to Uptime, but to Googlebot it means something else.

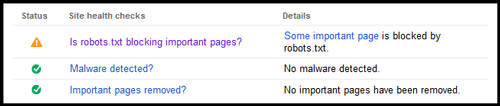

When you click the "Check site health" link shown in the image above, you will see this popup message:

The message gives you a three-point status report on your "Site health": whether files are blocked in robots.txt, whether your website is infected with malware, and whether pages of your site have been removed using the Remove URL tool.

In this particular situation, the error reported here is telling me to look at my robots.txt file. What I find there is that the entire website is blocked from Google, but it was blocked for a reason.

This website is under development and we don't want Google to read it until it's ready to launch, so it was completely blocked using the robots.txt file. Once the site is ready to launch we'll remove that block as explained in this Nugget.
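To see how a whole-site block looks to a crawler, here is a small sketch using Python's standard `urllib.robotparser` module. It compares a robots.txt that disallows everything (the usual way to hide a site under development) with the same file after launch, where an empty `Disallow:` allows everything. The `googlebot_allowed` function and the example.com URLs are hypothetical, and Googlebot's own parser may treat unusual robots.txt files differently from Python's.

```python
from urllib.robotparser import RobotFileParser

# Development robots.txt: block every crawler from the entire site.
DEV_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

# After launch: an empty Disallow line means nothing is blocked.
LIVE_ROBOTS_TXT = """\
User-agent: *
Disallow:
"""


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt lets Googlebot fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


print(googlebot_allowed(DEV_ROBOTS_TXT, "https://www.example.com/"))
print(googlebot_allowed(LIVE_ROBOTS_TXT, "https://www.example.com/"))
```

The first check reports that Googlebot is blocked and the second that it is allowed, which is exactly the switch you make when the site launches.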

As you can see, Google reports the Uptime of your website in various ways. I've only documented the ways available to me today. Your website's uptime depends on hardware, power, programming bugs, and a lot of human involvement.

You can always have "more 9's" in the uptime availability of your website, but every level becomes a lot more expensive.