Sometimes Google is sneaky.

In their quest to find all there is to find, Google will follow every link on your website inside out, upside down, and backwards until they've read all your web pages, online documents, and video files. But they don't just stop there.

The Google indexing process will also look at all the URLs that make your website work, and it will experiment with different permutations of them just to see if it can expose hidden details about your website. From Google's point of view, if one of these experiments returns a real page, they will save and index it.

That's where the sneaky part comes in: because of this, Google can actually cause your website to produce duplicate content.

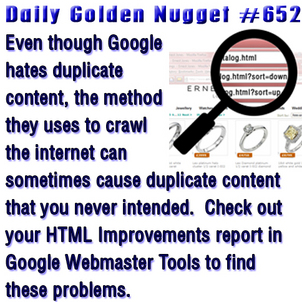

Here's a simple example using an online catalog page called /online-catalog.html.

To sort products, you would click a link and see this in your address bar: /online-catalog.html?sort=up

You could sort your products in the other direction and then see this: /online-catalog.html?sort=down

The only real page here is /online-catalog.html, but Google sees the other two "sort" pages as "unique" pages rather than simple permutations of the same page. Because of this, Google's automated system might penalize you for having duplicate content on your website.

This simple example can quickly grow into a much larger issue across a full product catalog. If your online product catalog is incorrectly programmed, you could easily confuse Google into thinking you have thousands of product pages when you really only have 100.
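To see how fast the numbers multiply, here is a small Python sketch that counts the URL variants a crawler could build for one page. The parameter names (`sort`, `view`, `per_page`) and their values are hypothetical, chosen only for illustration; `None` stands for "parameter left out of the URL".

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical parameters a catalog page might accept.
# None means the parameter is omitted from the URL entirely.
PARAMS = {
    "sort": [None, "up", "down"],
    "view": [None, "grid", "list"],
    "per_page": [None, "10", "25", "50"],
}

def url_variants(path, params):
    """Return every URL a crawler could build from one page and its parameters."""
    names = list(params)
    urls = []
    for values in product(*(params[n] for n in names)):
        # Keep only the parameters that are present in this combination.
        query = {n: v for n, v in zip(names, values) if v is not None}
        urls.append(path + ("?" + urlencode(query) if query else ""))
    return urls

variants = url_variants("/online-catalog.html", PARAMS)
print(len(variants))        # 3 * 3 * 4 = 36 URLs for one real page
print(len(variants) * 100)  # if 100 pages all accept these parameters: 3600 URLs
```

Every one of those URLs shows the same products, yet each looks like a separate page to a crawler, which is how 100 real pages can turn into thousands.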

Do you have a contact form on your website? Googlebot is also sneaky with online forms and may try filling them out with random information to see if it can expose other hidden pages. Have you ever received gibberish from your Contact Us form? That gibberish is usually a hacking attempt, but it might simply be Googlebot. You can usually prevent the gibberish emails by protecting your online forms with a CAPTCHA.

As part of your website's search engine optimization process, you should clean up as many of these accidental duplicate pages as possible. The easiest way to find them is by looking inside your Google Webmaster Tools (GWT) account.

Once inside your GWT account, go to "Optimization" and then "HTML Improvements." This is the same report I mentioned in the previous Daily Nugget; it shows all the pages with duplicate meta descriptions and duplicate title tags. As you drill down into this report, you will probably see identical URLs with different variable strings, and that's Google being sneaky!
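If you copy the flagged URLs out of that report, a short script can group the ones that share a page path and differ only in their variable strings. This Python sketch assumes the URLs come from a single site and are already in a plain list; the sample URLs and the `group_by_path` function are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit

def group_by_path(urls):
    """Map each page path to the URLs that reach it with different query strings."""
    groups = defaultdict(list)
    for url in urls:
        groups[urlsplit(url).path].append(url)
    # Keep only paths reached by more than one URL: these are likely duplicates.
    return {path: found for path, found in groups.items() if len(found) > 1}

# Hypothetical sample of URLs flagged for duplicate title tags.
flagged = [
    "http://www.example.com/online-catalog.html",
    "http://www.example.com/online-catalog.html?sort=up",
    "http://www.example.com/online-catalog.html?sort=down",
    "http://www.example.com/contact.html",
]

for path, found in group_by_path(flagged).items():
    print(path, len(found))  # /online-catalog.html 3
```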

There are two ways to clean up this problem. The first requires reprogramming your pages to return a 404 error page when an incorrect variable string is entered. The second is to use the filtering inside Webmaster Tools to ask Google to ignore those variable strings.
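The first method boils down to a whitelist: each page accepts only the variables and values it actually uses and answers anything else with a 404. Here is a minimal Python sketch of that check, assuming the catalog page's only valid variable is `sort` with the values `up` and `down`. The `ALLOWED` table and `status_for` function are hypothetical names; a real site would apply the same logic inside whatever framework serves its pages.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical whitelist: page path -> {variable name: allowed values}.
ALLOWED = {
    "/online-catalog.html": {"sort": {"up", "down"}},
}

def status_for(url):
    """Return 200 for known pages with valid variables, otherwise 404."""
    parts = urlsplit(url)
    rules = ALLOWED.get(parts.path)
    if rules is None:
        return 404  # unknown page
    # keep_blank_values so that "?sort=" is checked (and rejected) too.
    query = parse_qs(parts.query, keep_blank_values=True)
    for name, values in query.items():
        # Reject unknown variables, repeated variables, and unexpected values.
        if name not in rules or len(values) != 1 or values[0] not in rules[name]:
            return 404
    return 200

print(status_for("/online-catalog.html?sort=up"))        # 200
print(status_for("/online-catalog.html?sort=sideways"))  # 404
print(status_for("/online-catalog.html?color=red"))      # 404
```

The bare page and its two real sort orders still work, while any variable string Google invents on its own gets a 404 and drops out of the index.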

You should talk to your website programmer or SEO professional to find out which method is more effective for you.

This type of "Google-induced" duplicate content seems to plague all websites, no matter how hard you try to prevent it. Even though Google causes it, you still need to protect against it to ensure the best possible ranking.